
November 19, 2025

Well, could they? What are the protections on your ChatGPT sessions?

The notification pinged on my phone. A news alert: "AI Chatbot Data Breach Fears Grow." My breath hitched. The article detailed cybersecurity experts’ warnings about the potential for vast amounts of user data to be compromised.

It mentioned the ease with which personal information, creative ideas, and even sensitive conversations could be extracted. My fingers trembled as I scrolled through the piece, each paragraph a fresh wave of dread.

It could happen.

Just about every large organization has had corporate data, user accounts, or emails hacked. It could happen to OpenAI, which runs ChatGPT, as well.

A billion people have typed their hearts out to ChatGPT. Some of these thoughts and dreams could be very sensitive. Some people would be deeply concerned if these chats were ever leaked.

Not just personal thoughts, but personal tragedies, stories of extremely bad relationships, serious mental health issues, and contemplation of crimes or social attacks.

Of course, people do this because they trust the social networks and AI companies. And with a billion people using ChatGPT, everyone feels almost completely anonymous. Who would find my troublesome chats in a haystack of a billion people?

The AI companies need lots of human-generated text to train their models. Each new model is better in part because it learns from the saved history of chats with the previous model.

Using chats as "training data" recycles your thoughts, and the responses become feed for the next generation of AI chatbots.

In fact, many corporations pay OpenAI and its competitors extra for a guarantee that their employees' chats will not be recycled.

This means people who use it for free are not customers but unpaid suppliers of their stories, problems, and situations, for the AI industry to use and profit from.

If you aren't paying for ChatGPT (or Gemini or Claude or any of hundreds of others), then you almost never get to control how your data is used.

Even if you do pay, typically $20 a month, almost no one remembers to find the setting that turns off the recycling of their data.

This is the control problem. Few people want to think about how much trouble they could get into if their AI conversations were leaked.

This is especially true for people who are "public figures," such as artists, politicians, comedians, corporate leaders, or anybody else with a public reputation.

Even if their reputation is already poor, a leak could effectively bury them in a cascade of reputational damage and conflict.

"Redress" is a remedy to an undesirable or unfair situation.

While most search engines and platforms (Google, Bing, X, YouTube) have a "forget me" feature, AI chatbots don't. The "forget me" feature allows the owner of a website to ask Google and others to remove one or more pages from their search results.

There is no retroactive way to tell ChatGPT to "forget" all your chats, especially if you never signed up and just used it anonymously.

Neither can you sue. (Unless you are the New York Times.)

The AI companies will deny having the capacity to delete "your" data, or will claim that you gave them the right to use it (in a 14-page privacy agreement) and that they won't give up those rights.

Another argument is that they have a right to free speech (under US law).

Yet another argument is that they want to avoid censorship, and therefore do not want anyone censoring the companies' own content, even if that content is only very indirectly derived from yours.

Fighting trillion-dollar companies with lawyers is not a winning tactic.

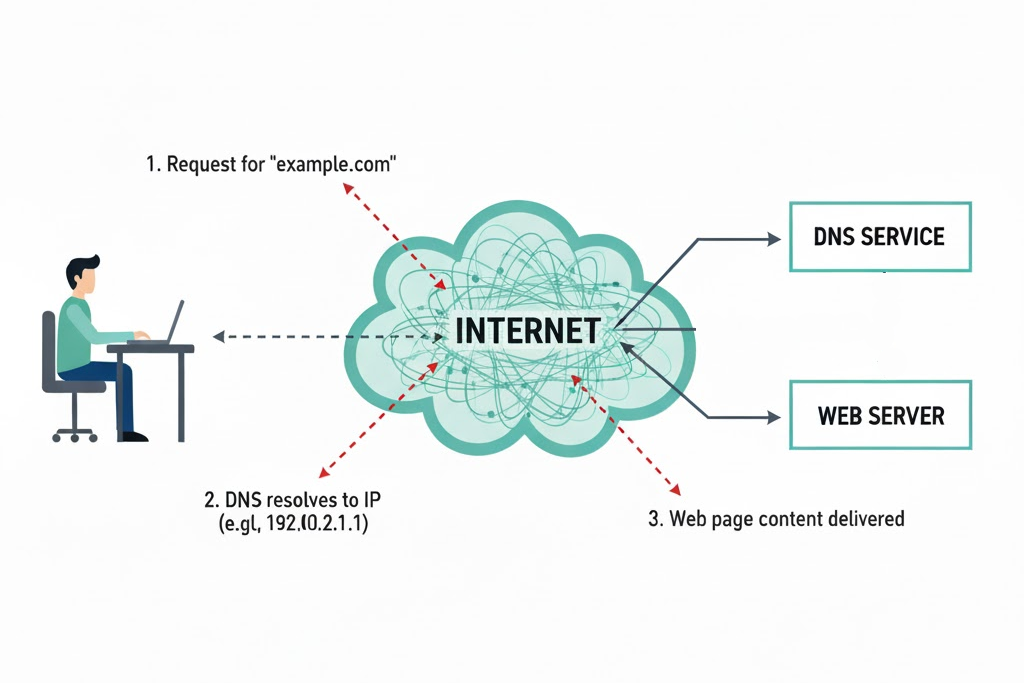

Instead, use privacy-focused AI services, but make sure your conversations with their AI really are private and cannot be disclosed. It is like choosing a VPN service that promises not to record the details of every VPN session.

A more expensive option is to run your own AI service on your own laptop or PC. This requires a PC or Mac with a large amount of memory (64GB) and a many-core CPU, which is not exactly cheap. The software and AI model files are free, however.
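Why so much memory? A rough back-of-the-envelope sketch helps. It assumes a common rule of thumb (not a vendor figure) that a model's weights take about (parameters × bits per weight ÷ 8) bytes, and it adds a hypothetical 8 GB of headroom for the operating system and the conversation itself. The model sizes below are illustrative examples only, and real software needs more than the weights alone, so treat the results as a lower bound.

```python
# Rule of thumb (an assumption): weights need about params x bits_per_weight / 8 bytes.
# Treats 1 GB as 10**9 bytes and ignores runtime overhead beyond a fixed headroom.

def weights_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory, in GB, needed just to hold the model weights."""
    bytes_per_weight = bits_per_weight / 8
    # (params_billions * 1e9 weights * bytes_per_weight) / 1e9 bytes per GB
    return params_billions * bytes_per_weight


def fits_in_ram(params_billions: float, bits_per_weight: int,
                ram_gb: float, headroom_gb: float = 8.0) -> bool:
    """True if the weights plus a hypothetical headroom allowance fit in RAM."""
    return weights_memory_gb(params_billions, bits_per_weight) + headroom_gb <= ram_gb


if __name__ == "__main__":
    # Illustrative model sizes (billions of parameters) and precisions (bits per weight).
    for params, bits in [(8, 16), (8, 4), (70, 16), (70, 4)]:
        need = weights_memory_gb(params, bits)
        ok = fits_in_ram(params, bits, ram_gb=64)
        print(f"{params}B model at {bits}-bit: ~{need:.0f} GB of weights, fits in 64 GB: {ok}")
```

Under these assumptions, a 70-billion-parameter model compressed to 4 bits per weight (about 35 GB) fits on a 64 GB machine, while the same model at 16 bits (about 140 GB) does not.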

With some knowledge, and perhaps some money, you can keep your AI use private and avoid playing the corporate risk game.

The notification pinged on my phone. OpenAI had just confirmed that my data was not "exfiltrated", whatever that means. My stomach relaxed. My neck relaxed. I was safe.

For now.

That is all.

Latest Articles

September 10, 2025Use your fingerprints to unlock your phone, not a code or face.

October 14, 2025Knowing how passwords are stored helps motivate you to make and keep good passwords.

September 24, 2025 updated January 14, 2026Six different ways to list the software apps installed on your computer.

November 19, 2025Well, could they? What are the protections on your ChatGPT sessions?

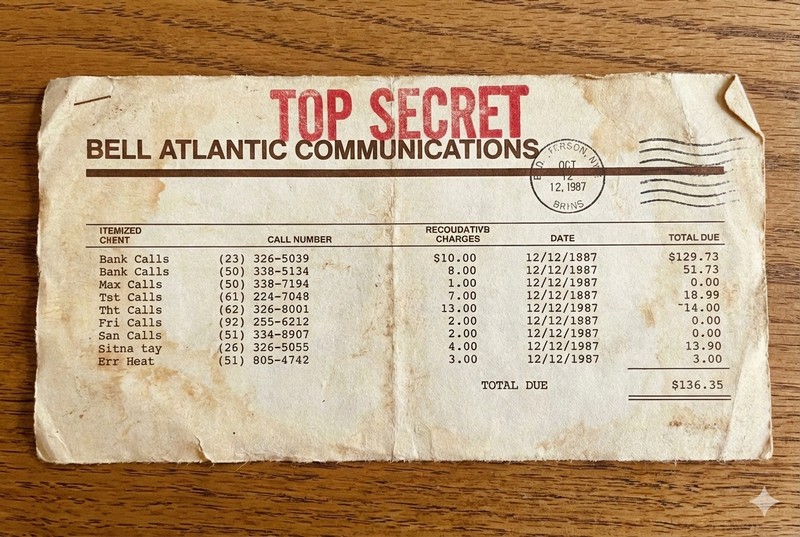

January 14, 2026Why is my telephone bill a secret? We explain the risks of people seeing your phone bill.

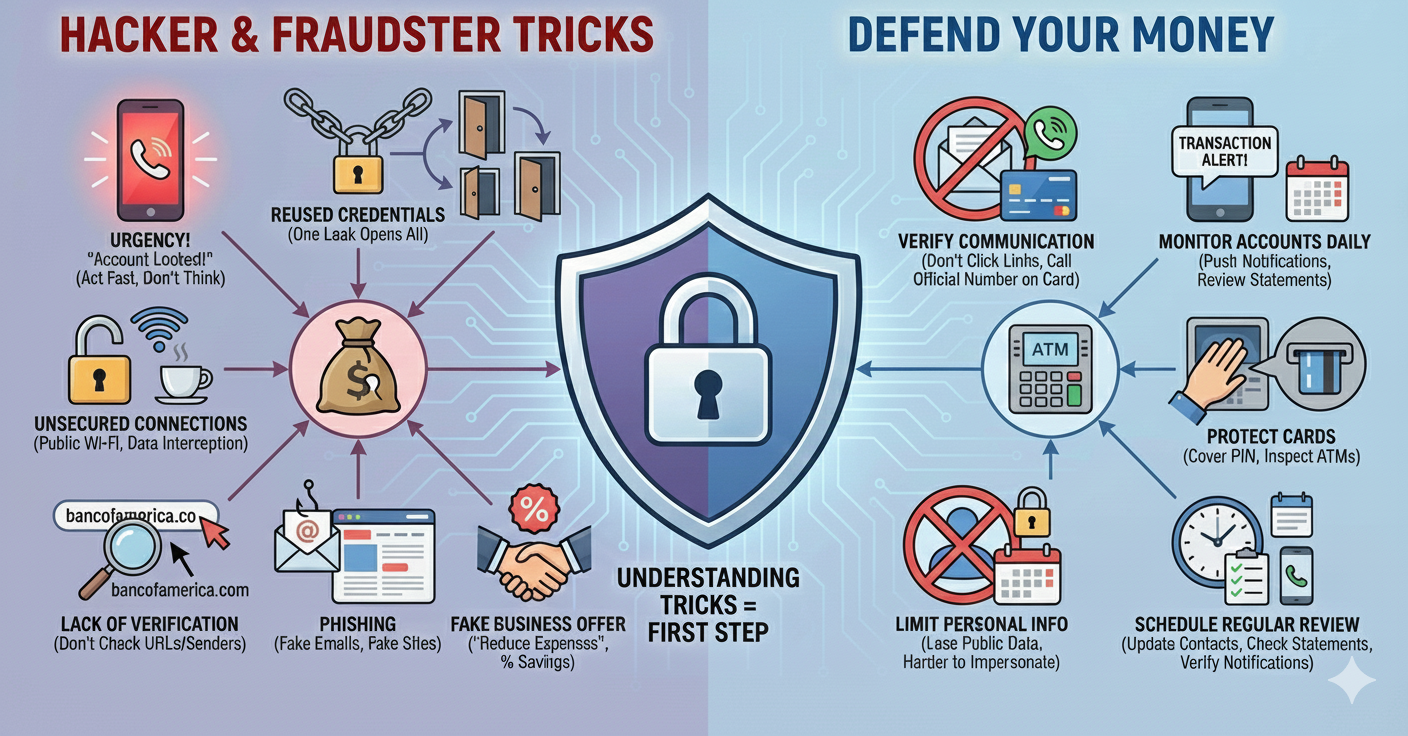

January 8, 2026How do I Defend My Money as a Small Business.

January 15, 2026We explain the difference between a Recovery Drive and a Windows Installation Drive.

February 2, 2026What are three different ways to backup my Windows computer?